Yeah, I’m currently using that one, and I would happily stick with it, but it just seems AMD hardware isn’t up to par with Nvidia when it comes to ML.

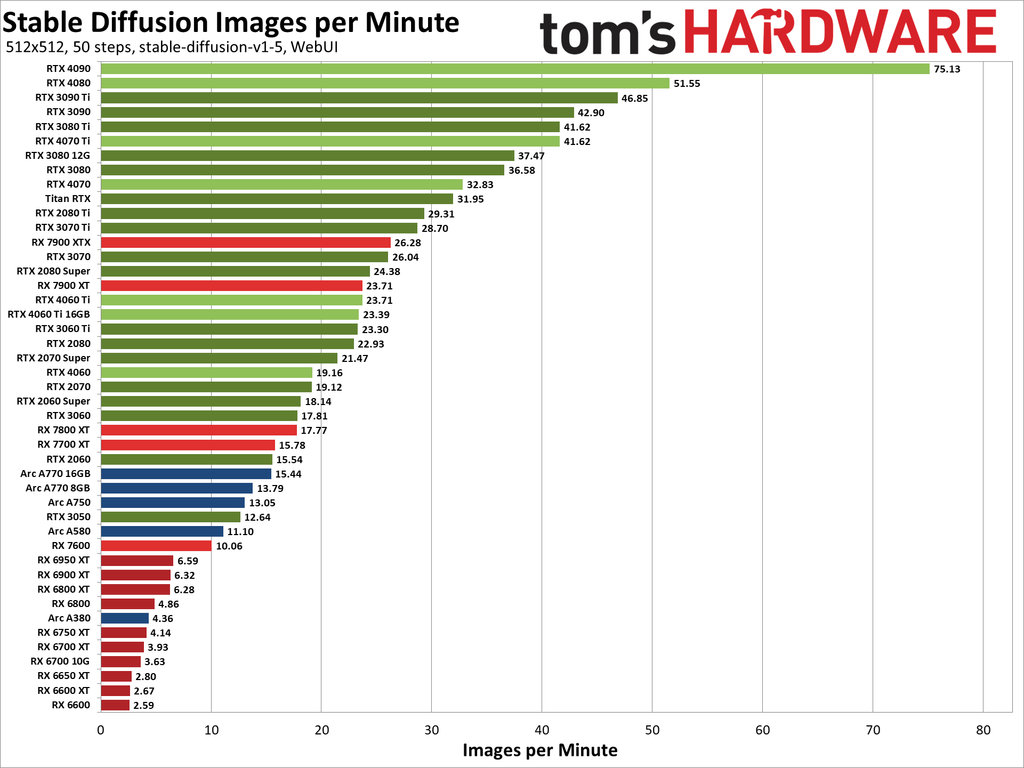

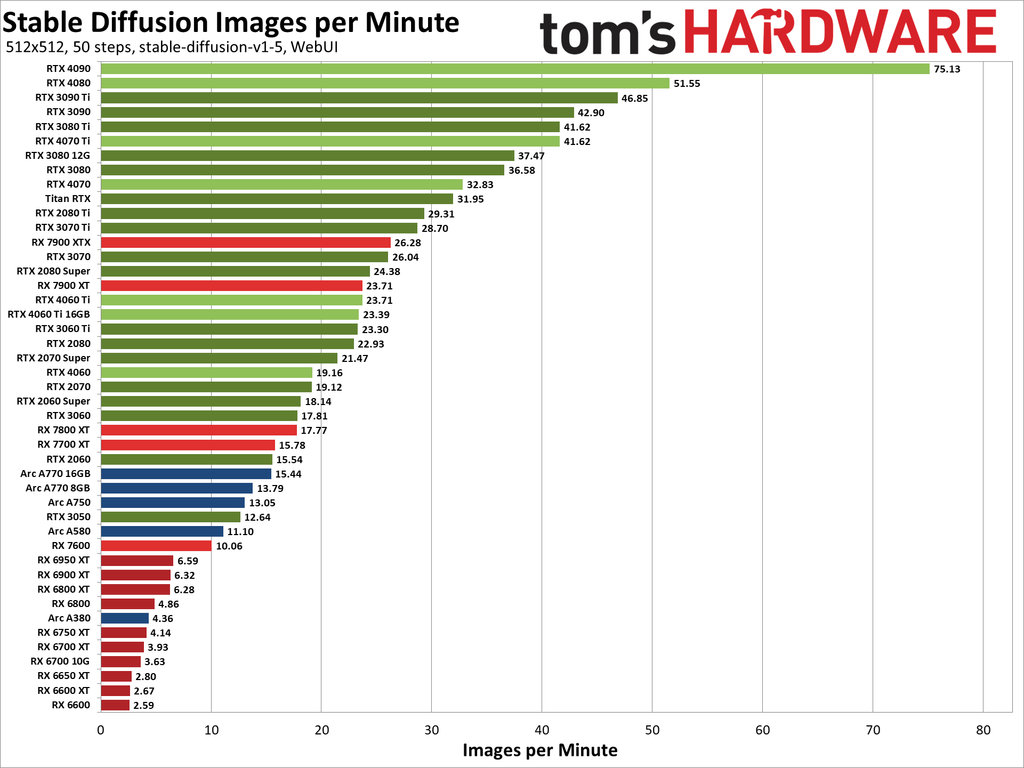

Just take a look at the benchmarks for stable diffusion:

Now I’m actually considering that one as well. Or I’ll wait a generation I guess, since maybe by then Radeon will at least be comparable to NVIDIA in terms of compute/ML.

Damn you NVIDIA

Yeah, was just reading about it and it kind of sucks, since one of the main reasons I wanted to go Wayland was multi-monitor VRR and I can see it is also an issue without explicit sync :/

Interesting thought, maybe it’s a mix of both of those factors? I mean, I remember using AI to work with images a few years back when I was still studying. It was mostly detection and segmentation though. But generation seems like a natural next step.

But improving image generation definitely doesn’t suffer from a lack of funding and resources nowadays.

I mean, we didn’t choose it directly - it just turns out that’s what AI seems to be really good at. Companies firing people because it is ‘cheaper’ this way (despite the fact that the tech is still not perfect) is another story tho.

For Logitech devices there is also Solaar.

You can check if it has the functionality you want (not sure, since I haven’t used it much and only for basic stuff).

Meh. Just a few segments, that’s all, not a full season ;-; One can dream though

Thinking about it from your point of view, maybe MS was right and Linux is a cancer too. Technically it behaves similarly to systemd, since most Unixes are actually Linuxes nowadays (excluding BSD ofc, but they are still in the minority, similarly to alternatives to systemd). It is even a binary blob as well!

Should every distro use/develop a different kernel? Should we focus our resources on providing alternatives and again have a multitude of different Unix versions, each incompatible with the others? Isn’t it better that we have this solid foundation and make it as good as it can be?

Overall I think standardization of init is not so bad, just like adopting the Linux kernel was. It is actually quite nice that you can hop from distro to distro and know what to expect from such a basic thing as the init process.

Anyways, in Linux land you actually have the option to replace it. Granted, it is not as easy, but there are plenty of distros that allow you to ditch systemd in favor of something else.

Yeah, I think most of the time, if you don’t run a very sensitive enterprise-grade machine, there isn’t much point to it.

Maybe run it once in a while if you really want to.

Agreed. I’d say with open source it is harder to ‘get away’ with malicious features, since the code is out in the open. I guess if authors were to put in those features, the open nature of their code would also serve as a bit of a deterrent, since there is a much bigger chance of people finding out compared to closed source. However, as you said, it is not impossible, especially since not many people look through the code of everything they run. And even then, it can be obfuscated well enough not to be spotted on a casual read-through.

I do periodic backups of my system from a live USB via Borg Backup to a Samba share.
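For anyone curious, here is a rough sketch of how a run like that could be scripted in Python. It only assembles a `borg create` call; the paths (`/mnt/backup/borg-repo` for the mounted share, `/mnt/root` for the installed system), the archive naming, and the chosen options are assumptions made up for illustration, not the exact setup described above.

```python
import subprocess
from datetime import date


def borg_create_cmd(repo, source, archive_prefix="system", today=None):
    """Build the argument list for a `borg create` run.

    repo   -- path to an already initialised Borg repository (hypothetical)
    source -- directory to back up, e.g. the mounted root filesystem
    """
    today = today or date.today()
    # Borg addresses an archive as REPO::NAME; the name here is prefix + ISO date.
    archive = f"{repo}::{archive_prefix}-{today.isoformat()}"
    return [
        "borg", "create",
        "--stats",                # print a summary when the archive is done
        "--compression", "zstd",  # compress chunks with zstd
        "--one-file-system",      # do not cross into other mounted filesystems
        archive,
        source,
    ]


def run_backup(cmd):
    """Run the prepared command; raises CalledProcessError if borg fails."""
    subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Hypothetical mount points: the Samba share and the system's root partition.
    cmd = borg_create_cmd("/mnt/backup/borg-repo", "/mnt/root")
    print(" ".join(cmd))
    # Call run_backup(cmd) once the paths match your machine.
```

Keeping the command construction separate from the actual run makes it easy to print and double-check the call before letting it touch the repository.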

Could be both of those things as well.