To whoever mentioned Librewolf previously as a better alternative to Firefox: Thank you.

Thanks for sharing. It looks like a great alternative.

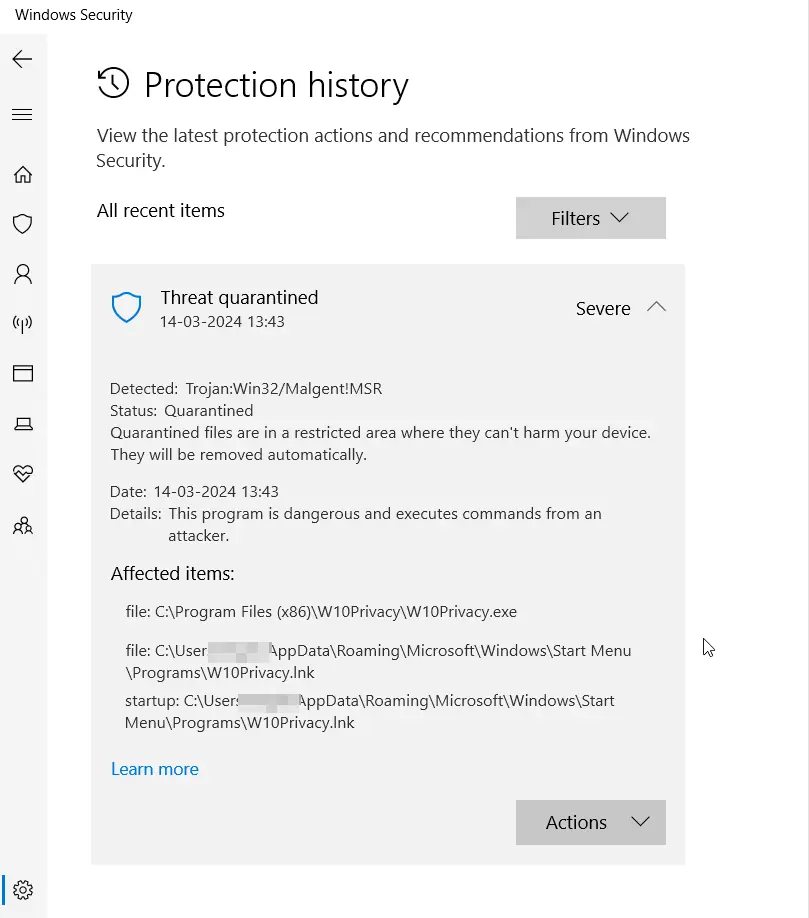

Edit: Darn. It is recognised as a trojan:

I spent the better part of 45 minutes writing and revising my comment, and English is not my first language, so thank you sincerely for the praise.

ShutUp10 and AppBuster from the German company O&O?

Interesting timing. The EU has just passed the Artificial Intelligence Act, setting a global precedent for the regulation of AI technologies.

What is it?

Key Takeaways:

Why Does This Matter in the US?

Banned applications: The new rules ban certain AI applications that threaten citizens’ rights, including biometric categorisation systems based on sensitive characteristics and untargeted scraping of facial images from the internet or CCTV footage to create facial recognition databases. Emotion recognition in the workplace and schools, social scoring, predictive policing (when it is based solely on profiling a person or assessing their characteristics), and AI that manipulates human behaviour or exploits people’s vulnerabilities will also be forbidden.

Sources:

Yeah, I haven’t read good things about Onyx either. Chinese-inside™. Scummy business practices.

I prefer Kobo as an alternative.

Here’s a summary:

Unbaited title: "Ukraine Gains Upper Hand in Electronic Warfare Against Russia"

In the ongoing conflict, Ukraine has effectively countered Russia’s electronic warfare (EW) capabilities. Initially at a disadvantage, Ukrainian forces have developed their EW strategies to successfully disrupt Russian electronic operations, crucially affecting the course of battles. The article highlights the significance of EW in modern warfare and underscores the urgency for the US military to revitalize its EW capabilities, drawing lessons from the Ukrainian experience.

Summarised with ChatGPT.

Or a Nokia Streaming Box

Odd thumbnail, but a fairly informative video.

As one example, 13 dog breeds and their crossbreeds are banned in Denmark: https://en.foedevarestyrelsen.dk/animals/animal-welfare/danish-legislation-on-dogs

You raise a fair point. Hiding downvotes could help avoid bandwagon negativity, as users may be less inclined to pile on additional downvotes. However, I still believe transparency should take priority over these concerns.

Showing the full picture, with upvotes and downvotes displayed separately, allows users to judge content quality and community sentiment more accurately. I think we should trust users to make reasoned judgments rather than hide data from them. A slight potential increase in negativity seems a small price to pay for maintaining transparency and accountability on Lemmy.

Edit: I do agree that displaying a relative percentage, as you mention, could serve as a compromise.

Thank you for the explanation ❤️

My hidden read posts in Sync come back when I refresh. Maybe a bug -_-

This missing feature is absolutely crucial for transparency.

Big tech platforms are hiding downvotes, which obscures the true value of shared content in the eyes of users. I was excited to see Lemmy natively going against this trend, and saddened to see that it's not featured in Sync (yet?).

Separated votes are a crucial Lemmy feature if you ask me. Hoping that this will be implemented as an option in Sync.

Thank you for this approximate description of most Nordic countries.