Money is fake, scarcity is manufactured, capitalism is a scam

Unfortunately a lot of people have bought into the scam…

Three raccoons in a trench coat. I talk politics and furries.

Other socials: https://ragdollx.carrd.co/

Started my psychology course this year, and to be perfectly honest, the more I hear about psychoanalysis, the more it sounds like a bunch of bullshit made up by some horny guy who was full of himself

I would like to propose some changes to that title:

Microsoft CEO’s pay rises 63% to $79m,

despite [because of] devastating year for layoffs: 2,550 jobs lost [employees were fired by their greedy CEO] in 2024 [because he wanted more money]

Conservatives have already said that they want to inspect children’s genitals, so it’s only a matter of time until they start saying that they want to regularly inspect women’s genitals as well to “protect unborn children” (read: control women and fulfill their sick fetish)

But Biden is shaking his fist very angrily! That has to count for something! /s

If by tasteful you mean furry vore…

Then yeah, tasteful.

I remember when scientists were more focused on making AI models smaller and more efficient, and research on generative models was focused on making GANs as robust as possible with very little compute and data.

Now that big companies and rich investors have seen the potential for profit in AI, the paradigm has shifted to “throw more compute at the wall until something sticks”, so it’s not surprising it’s affecting carbon emissions.

Besides that, it’s also annoying that most of the time they keep their AIs behind closed doors, and even in the few cases where the weights are released publicly, these models are so big that they aren’t usable for the vast majority of people; sometimes even Kaggle can’t handle them.

9/11

Edit: At first I thought the joke was about those “who did 9/11” memes I see all the time on the internet, and only afterwards did I realize the date actually is 9/11 (I hadn’t seen any posts about it online yet, and we don’t talk about it at all in Brazil).

idk if that puts me ahead of or behind the curve…

But I couldn’t find a credible source for the “showing children pornography” portion you mentioned

It’s from one of the kids’ testimony in the first link (it starts with “Now, when you first saw the suitcase, where was it in that room.”), where he talks about how Michael showed him and his brother some porn mags. The first time, he was hanging out with MJ while MJ was putting on makeup, and MJ picked up a suitcase with the porn mags and showed the kid one of the pictures. On the second occasion, the kid can’t recall whether he, his brother, or MJ brought up the suitcase, but he says that they were all looking at the magazines together for “30 minutes to an hour”.

Regarding the books specifically, it’s one of those elements that on their own could be interpreted as just MJ being kinda weird. We know the first book was a gift given the fan’s inscription, and it’s fairly reasonable to assume that the second one was a gift too. As the blog post points out, the third book wasn’t brought up in court and wasn’t with the other two books, but it’s still reasonable to assume that it belonged to MJ. To me, the way the blog author tries to “soften” the book definitely points to some bias on their part:

The third book, that was confiscated in 1993 In Search of Young Beauty: A Venture Into Photographic Art (Charles Du Bois Hodges, 1964) which contains both boys and girls, mostly dressed, but some nude or semi-nude.

If we squint a bit, we could chalk these up to MJ possibly being a nudist or just being a bit weird. I do think it’s odd that he had books with pictures of nude children in them, and even liked one of them enough to inscribe his own message in it, but if the books were the only thing brought up in court, I certainly wouldn’t think that’s enough evidence to convict someone. Given the whole context and the other elements of the accusations, though, I’m not willing to give MJ that much benefit of the doubt.

Definitely not the impression I got from everything I’ve read: the sleeping in the same bed with kids, keeping books with naked pictures of children, showing porn to kids (perhaps the most common method predators use to try to groom children), and the whole thing with setting up an alarm around his bedroom.

Keep in mind that a lot of the information I’ve seen was from a WordPress blog bent on defending Jackson in any and every way possible, and yet I still don’t find the case they make convincing. The author speculates about what “true grooming” looks like, and why MJ’s actions supposedly don’t fit their personal expectations of grooming. They also try to justify him keeping those books with pictures of naked kids as if that was a normal thing to do.

Maybe if it was just one of these things, it could still be justified as MJ just being weird. But it’s all of these things together: a clear pattern of behavior and accusations for which the simplest explanation is that MJ was indeed a predator. What I can say is that if these accusations were directed at some random dude down the road, I definitely wouldn’t want my kids going near him.

Looked up her name on Twitter to see what people were saying about this, that was a mistake 🙄

A lot of people seem to hate her for whatever reason. She was far from perfect, but all things considered I think she did fine as CEO, and I never understood the hate. It can’t be easy to manage a company as big and complex as YouTube.

A year ago or so I would have said Clash Royale. But now… nah.

You’ll find plenty of videos that explain this better than I can, but at this point the devs clearly hate the community: the game is more pay-to-win than ever, evolutions are completely broken, and the devs barely bother to balance the game anymore.

It’s pretty obvious that right now they just want to squeeze as much money as possible from the game before it completely dies out.

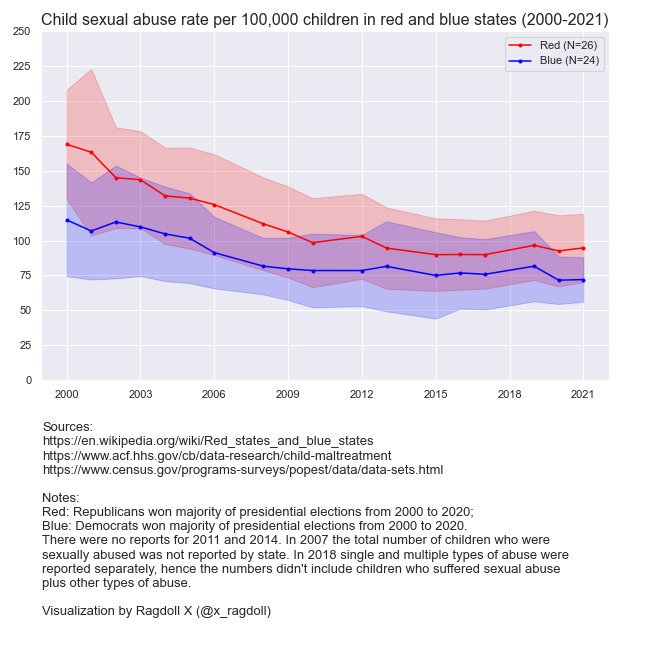

What’s the Y axis for the middle graph?

That’s the percentage of kids who’ve reported some kind of sexual violence.

Also only having 3 data points in such a brief window doesn’t really say much.

I disagree, because it’s not really just about these YRBS surveys; it’s the whole pattern. When we consider that conservatives are the only ones voting in favor of child marriage, and that pundits and randos on the internet will defend teen pregnancy, even a single survey showing a difference between red and blue states would just confirm a pattern that’s already pretty obvious, and we should seriously ask why their ideology leads to this kind of stuff and how to remedy it. Even if it’s just a 2 percentage point increase, this means that hundreds of thousands of children could be saved from abuse if conservatism were less prevalent.

Finally the grouping metric of “won majority of presidential elections from 2000 to 2020” isn’t clear and isn’t necessarily reflexive of policy. A more appropriate metric might be the party of the governor or the majority parties of their chambers.

There’s really no definitive metric for “red” vs. “blue” states, so while presidential election results obviously reflect the politics of the people in each state, I do agree that it’s not a thorough measure. However, the same pattern holds even when using other measures of political affiliation.

I say this because I have some additional context here: these graphs are part of an article I’m writing about the “pedocon” theory, and I can tell you that this same pattern shows up regardless of how we measure politics or CSA. Whether it’s polling on how many people identify as Republicans vs. Democrats, liberals vs. conservatives, or left-wing vs. right-wing, this correlation is still there. Looking at governors or chambers specifically could be an interesting addition, but I fully expect the same pattern to hold.

Ages ago I posted a meme to r/PoliticalCompassMemes about how Lauren Boebert was happy to become a grandma at 36 when her 17-year-old son had a kid, which is obviously very weird and unhealthy. Like clockwork, a lot of ‘AuthRight’ flairs came to the comments to defend teen pregnancy. You can see the post here if you really want to have a look at the hellhole that is PCM: https://redd.it/11n2z00

So yeah, it pretty much is a feature for them.

The first graph shows all reported child sexual abuse cases that were substantiated. You can see the full tables on the ACF website; in 2021 specifically, there were 59,328 substantiated CSA cases in the U.S.

The share of abused kids whose abuse gets officially reported actually seems to be decreasing. Not only did substantiated cases fall, as you can see in the graph (and they fell further in 2022, to 59,044 cases), but over the same period the Youth Risk Behavior Survey shows the percentage of teens who said they’ve experienced some kind of sexual violence increasing from 9.7% in 2017 to 11% in 2021, and for rape specifically from 7.4% to 8.5%.

And another important reminder:

I’ve seen some people on Twitter complain that their coworkers use ChatGPT to write emails or summarize text. To me this just echoes the complaints previous generations made against phones and calculators. There’s a lot of vitriol directed at anyone who isn’t staunchly anti-AI and dares to use a convenient tool that’s available to them.
